by Devin Asay

Brigham Young University

If you have ever tried to create stacks in a language other than English and the more common West European languages, you have run into the problem of how to produce all the character representations that the language requires. Of course, this is a problem in any programming environment, not just in LiveCode. Fortunately, Unicode is there to help us out. The good news is that LiveCode handles Unicode seamlessly in nearly all circumstances. By learning just a few tips and tricks you can be on your way to multilingual bliss in no time!

Before we get into Unicode itself, we need to understand the basics of how characters are represented in computer memory and on the screen. As you know, at the most basic level computers are nothing more than very powerful number-crunching machines. The computer’s Central Processing Unit, or CPU, is composed of millions of tiny transistors, each of which can be set to one of two states--off or on. Because of this binary nature, CPUs manipulate numbers using base 2, or binary, arithmetic; in other words, all numbers are represented by 0s and 1s. That means that any time you press a key on your keyboard you are ultimately sending a binary code to the computer’s CPU.

Each 0 or 1 stored in a computer’s memory is known as a bit. Computers handle and store data in chunks of 8 bits called bytes. The earliest micro-computers used 8-bit processors--CPUs that could process one 8-bit byte of data at a time. In the early days of computing, one bit in every byte was used for internal “housekeeping” purposes, leaving only 7 bits available for storing data. For these and other historical reasons, characters of text could only be represented by a maximum of 7 bits.

Because computers needed to be able to pass text data to one another, in the early 1960s the American Standards Association developed a standard character encoding system known as ASCII--the American Standard Code for Information Interchange. (See the chart below.) Due to the 7-bit limitation, this standard only specified 128 (2⁷) code points, numbered from 0 to 127. Upper and lower case Latin letters, numbers and common punctuation were assigned unique code points in this table. For instance, upper-case A is ASCII 65, the numeral 3 is ASCII 51, a space character is ASCII 32, and so forth. This standard is still widely used today and is consistent across all modern operating systems.

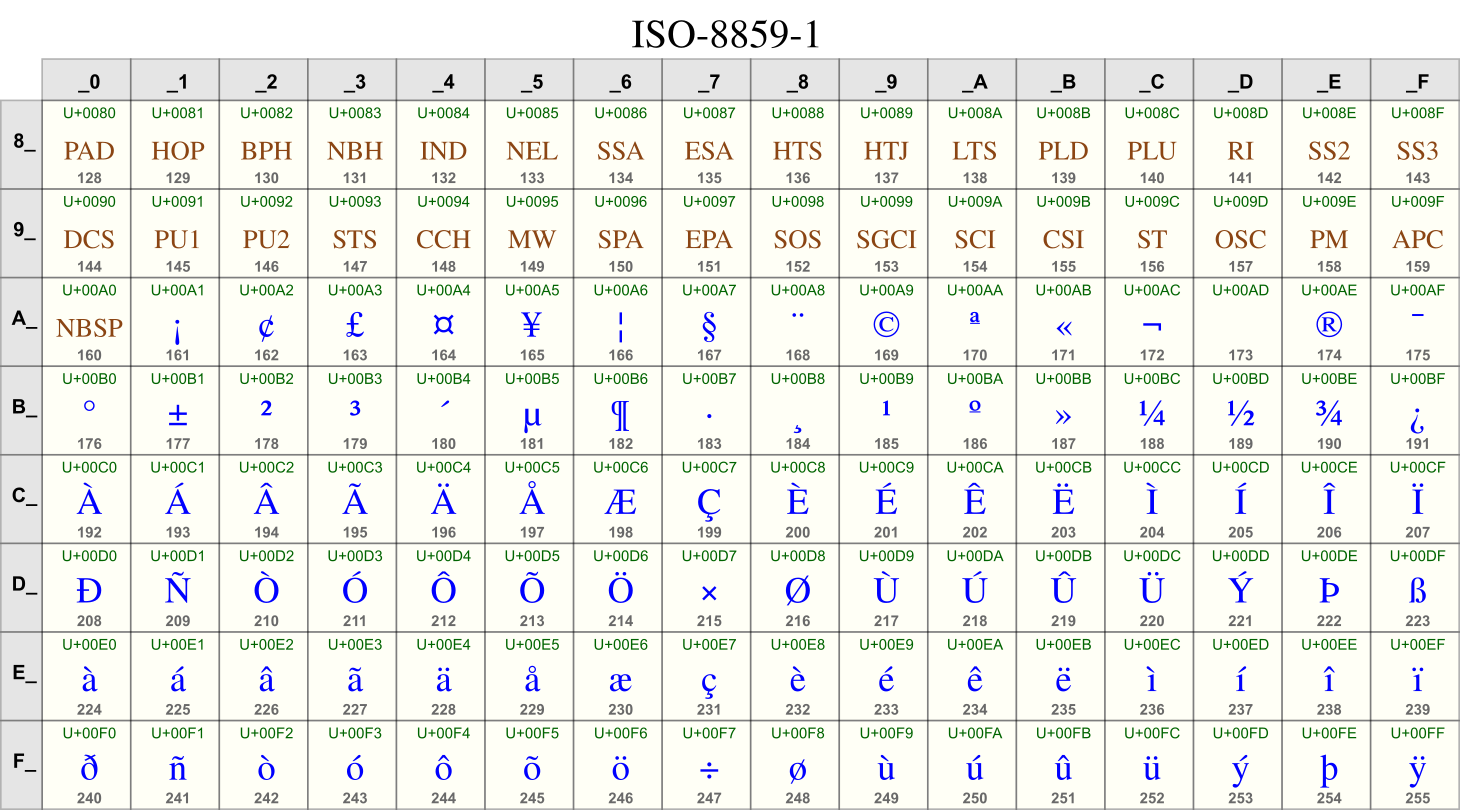
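To make the link between characters, code points, and bits concrete, here is a minimal sketch that you could paste into a button script. It relies on LiveCode's built-in codepointToNum() and baseConvert() functions; the mouseUp wrapper and the variable names are only illustrative.

```livecode
on mouseUp
   -- Look up the code point of a few ASCII characters and show each one in binary
   put empty into tReport
   repeat for each item tChar in "A,3,a"
      put codepointToNum(tChar) into tCode
      -- baseConvert(number, fromBase, toBase) rewrites the decimal value in base 2
      put tChar & tab & tCode & tab & baseConvert(tCode, 10, 2) & cr after tReport
   end repeat
   answer tReport
end mouseUp
```

Each row of the dialog shows a character, its decimal code point, and the same value in binary. Every value fits in seven binary digits, which is exactly the limit described above.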

In the 1970s and 1980s several things happened that brought increasing complexity and inconsistency to the original ASCII standard. More efficient microprocessor designs freed up the 8th bit in the byte so that the size of the character encoding table could be doubled to 256 (2⁸) code points. However, at the same time computer use outside of the U.S. and Europe skyrocketed, while intense competition emerged between various operating systems. This meant that scores of different uses for the upper 128 code points emerged. IBM, Apple Computer, and Microsoft each developed a standard character mapping for what is sometimes called “extended ASCII”. The Microsoft version, Windows code page 1252, became the most commonly used; it is a superset of the ISO-8859-1 (Latin 1) character set and differs from it only in code points 128 to 159. Apple’s extended ASCII maps the upper 128 code points quite differently and is known as Mac Roman. See below for examples of several variants of extended ASCII.

Variants shown: IBM Codepage 850, Windows Codepage 1252, ISO-8859-1, and Mac Roman.
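The practical effect of these competing mappings can be sketched with LiveCode's textDecode() function, which is discussed later in this article. The example below takes one and the same byte and interprets it under three of the encodings named above. The encoding names are the ones I understand the LiveCode dictionary to list for textDecode(); treat them as an assumption and check them against your version.

```livecode
on mouseUp
   -- A single byte with the value 233 (hex E9), from the upper 128 code points
   put numToByte(233) into tByte
   put textDecode(tByte, "ISO-8859-1") into tLatin1 -- é in Latin 1
   put textDecode(tByte, "CP1252") into tWindows    -- é in Windows code page 1252
   put textDecode(tByte, "MacRoman") into tMac      -- È in Mac Roman
   answer "Latin 1:" && tLatin1 & cr & "Windows:" && tWindows & cr & "Mac Roman:" && tMac
end mouseUp
```

The byte never changes; only the lookup table does. That is why text written on one system could look wrong on another.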

At the same time, people who needed to express non-Latin character sets created scores of fonts that mapped the upper 128 code points to other alphabets, such as Cyrillic, Hebrew, Greek, or East European Latin alphabets. To further confuse matters, character-based writing systems such as Chinese and Japanese could not be expressed in only 256 code points, so systems were devised under which pairs of bytes were combined and mapped to large character lookup tables that could handle the thousands of characters needed for these writing systems. As a result, encoding schemes proliferated until there were dozens of different character encodings covering various writing systems.

During the 1980s and 1990s it was a common occurrence for people exchanging documents electronically internationally to find that what started as, say, Cyrillic text on one end came out as hopeless gobbledygook on the other end, because the recipient didn’t have a compatible character font. Even relatively common punctuation and typographic characters, such as European currency symbols, “curled” quotes, and dashes could be rendered incorrectly on the recipient’s system, due to incompatible encoding schemes between the sender’s and recipient’s systems.

In the 1980s the Unicode Consortium emerged to try to address this confusing situation. The character encoding standard that resulted is designed to provide a way to display all of the world’s languages by using a much larger character table of 1,114,112 code points. The goal in Unicode was to assign a unique code point to each character in all the world’s languages, with enough code points left over for characters that haven’t been invented yet. The Unicode Consortium’s credo is:

Unicode provides a unique number for every character,

no matter what the platform,

no matter what the program,

no matter what the language.

The road to the Unicode utopia, however, has been long and arduous, and even today many of the most common computing applications rely on ASCII text for storage and transmission of text data. Fortunately for us, as of LiveCode v. 7, all text is stored and rendered using Unicode. That enables LiveCode to easily handle all of the world’s writing systems seamlessly.

In order to understand how to use Unicode text in LiveCode, it is important to be familiar with a few terms and concepts. Unlike ASCII, Unicode is much more than simply a collection of character codes. It also defines things like sort order, writing direction, when a character is represented by a specific glyph, and much more. The good news is that the LiveCode engine handles all of this complexity for us, so there aren’t that many extra scripting tokens for us to learn.

The old ASCII standard is simple and reliable. Because of the restricted technical and language environment in which it operates, there is a simple, one-to-one relationship between the character code points stored in your computer’s memory and the actual letter shapes drawn on your screen. However, Unicode requires much greater flexibility, because in many languages there is no simple correspondence between a single unit of text with a specific semantic identity and the way that unit is visually represented. In Unicode this difference is represented by the terms code point and glyph. A character or code point is an abstract concept, referring to the single semantic unit, while a glyph is the visual representation of a character in a given environment. For example, in certain languages, such as Arabic, the same character may be represented by different glyphs, depending on whether the character appears in isolation, or at the beginning, middle, or end of a word.
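A related case, though not the same thing as the contextual shaping of Arabic letters, is a combining sequence: several stored code points that the reader sees as one unit on screen. The sketch below assumes LiveCode 7 or later, where, as I understand it, the character chunk counts what the user perceives as a character while the codepoint chunk counts the stored code points.

```livecode
on mouseUp
   -- "e" followed by U+0301 COMBINING ACUTE ACCENT (decimal 769)
   put "e" & numToCodepoint(769) into tText
   put "codepoints:" && the number of codepoints in tText into tReport
   put cr & "characters:" && the number of characters in tText after tReport
   answer tReport
end mouseUp
```

Under that assumption the dialog reports two code points but one character, even though only a single accented letter is drawn.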

With this background we can finally talk about Unicode as it is implemented in LiveCode. Under the Unicode standard, there are several encoding systems, most notably UTF-8, UTF-16, and UTF-32. LiveCode uses the UTF-16 encoding internally for all text operations. However, LiveCode has the ability to transcode between UTF-16 and several other common encodings. Thus, the first Important Thing to Remember when using Unicode in LiveCode is:

1. Unicode in LiveCode is always UTF-16.

Another complication comes from the fact that different CPUs store sequences of bytes in different orders. Since UTF-16 characters are typically made up of two bytes each (and are often referred to as double-byte characters), the order in which those bytes are stored can be different when comparing, say, PowerPC (PPC) processors with Intel processors. Motorola and PPC processors store the most significant byte first in the sequence and so are called “big endian”; Intel processors store the most significant byte last in the sequence and are called “little endian”.

Big endian: the most significant byte is stored first.

Little endian: the most significant byte is stored last.
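You can see the two byte orders for yourself with a short sketch like the following. It assumes that textEncode() accepts the encoding names "UTF-16BE" and "UTF-16LE" and that it writes no byte order mark for them; the test character is an arbitrary choice.

```livecode
on mouseUp
   put numToCodepoint(1046) into tChar -- Cyrillic Ж, U+0416
   put textEncode(tChar, "UTF-16BE") into tBig
   put textEncode(tChar, "UTF-16LE") into tLittle
   -- byteToNum() turns each raw byte back into a number from 0 to 255
   put "Big endian:" && byteToNum(byte 1 of tBig) && byteToNum(byte 2 of tBig) into tReport
   put cr & "Little endian:" && byteToNum(byte 1 of tLittle) && byteToNum(byte 2 of tLittle) after tReport
   answer tReport
end mouseUp
```

U+0416 is hex 04 16, so the big-endian line should read 4 22 and the little-endian line 22 4.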

The details of why and how this works aren’t important here, but what you need to know is Important Thing to Remember number 2:

2. LiveCode uses the Unicode byte order determined by the host processor.

What does this mean for you as a developer? Let’s consider the case of two users. User 1 works on an older Mac system that runs on a PPC processor. User 2 works in Windows on an Intel processor. If User 1 creates Unicode text, saves it to a file, and sends it to User 2, then when User 2 tries to read the file it will come out scrambled because it came from a big-endian system. Even though it is possible to convert big-endian Unicode to little-endian, it adds pain, complexity and uncertainty.

Having said all this, nowadays the overwhelming majority of computers run on little-endian processors such as Intel’s, so conflicts between big- and little-endian encodings are increasingly rare. Still, there could be differences in the way various programs encode their text output. See the section on Converting between character encodings below to find out how to avoid these problems.
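If you do receive a file that you know was written as big-endian UTF-16, one way to read it is to name the byte order explicitly when decoding. This is only a sketch: the file name is hypothetical, and it assumes textDecode() accepts "UTF-16BE" as an encoding name.

```livecode
on mouseUp
   -- Hypothetical file received from a big-endian system
   put url ("binfile:/path/to/file/fromUser1.txt") into tRawTxt
   -- State the byte order explicitly instead of relying on the host processor
   put textDecode(tRawTxt, "UTF-16BE") into field "myField"
end mouseUp
```

This only works when you know how the sender encoded the text, which is one reason a single agreed-upon exchange format is easier to live with.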

Typing Unicode text into fields is a good place to start because it’s the easiest. LiveCode fields can handle Unicode text input without any intervention by the developer. That is because LiveCode simply uses the text input methods supplied by the host operating system. So if you want to type Japanese characters into a field, you simply select the Japanese text input system you want to use and start typing. LiveCode knows how to render it properly in the field, and it is then ready for use. Bottom line: if you want to learn how to select the text input method on your OS, see the help documentation for that OS.

You may be familiar with the rich collection of tools that LiveCode provides for working with text. Among them are two functions, codepointToNum() and numToCodepoint(), which allow us to work with the Unicode code point value for any character. They work like this (try it in LiveCode’s message box):

put codepointToNum("a") -- returns 97

put numToCodepoint(97) -- returns the letter 'a'

In fact, you can use the numToCodepoint() function to create a rudimentary Unicode table. Just create a new field, name it "codepoints" and run this routine:

put empty into fld "codepoints"

repeat with i = 0 to 127

put i & tab & numToCodepoint(i) & cr after fld "codepoints"

end repeat

This routine will display the 128 characters of the Unicode block C0 Controls and Basic Latin, which corresponds to the original ASCII standard. If you replace 0 and 127 with 1024 and 1279 respectively, it will display the Unicode Cyrillic block.
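If you want to browse several blocks, the same loop can be wrapped in a handler that takes the range as parameters. The handler name listCodepoints and its parameter names are hypothetical. The sketch builds the list in a variable and writes to the field once, which is usually faster than appending to a field on every pass.

```livecode
-- Hypothetical helper: list the code points from pFirst to pLast
-- in a field named "codepoints"
command listCodepoints pFirst, pLast
   put empty into tList
   repeat with i = pFirst to pLast
      put i & tab & numToCodepoint(i) & cr after tList
   end repeat
   -- Update the field once, after the whole list has been built
   put tList into field "codepoints"
end listCodepoints
```

With this handler in the card or stack script, calling `listCodepoints 1024, 1279` would fill the field with the Cyrillic block.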

When using Unicode text, especially if you are importing or exporting text from or to other systems or environments, you may need to convert your UTF-16 text to other encodings. The most common reason for doing this is reading and writing UTF-8 files. If you are planning to share text with others or send it over the internet, I recommend storing your Unicode text in UTF-8 format. UTF-8 is part of the Unicode standard, and is a reliable way to store Unicode text in an ASCII-compatible format. UTF-8 is especially important for encoding Unicode text for use in web browsers and email.
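To see what ASCII compatibility means in practice, the following sketch encodes three characters as UTF-8 and counts the resulting bytes. It uses the textEncode() function with the encoding name "UTF-8"; the sample characters are arbitrary choices.

```livecode
on mouseUp
   -- Latin A, Cyrillic Ж (U+0416) and the kanji 日 (U+65E5)
   put "A" & comma & numToCodepoint(1046) & comma & numToCodepoint(26085) into tChars
   put empty into tReport
   repeat for each item tChar in tChars
      put textEncode(tChar, "UTF-8") into tBytes
      put tChar & tab & the number of bytes in tBytes & cr after tReport
   end repeat
   answer tReport
end mouseUp
```

The ASCII letter takes a single byte, identical to its ASCII code, while the Cyrillic letter takes two bytes and the kanji three. Plain ASCII text is therefore already valid UTF-8.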

The keys to using UTF-8 text in LiveCode are the textEncode() and textDecode() functions. Let’s say you’ve gotten some UTF-8 text from a web site and you want to display it in your LiveCode stack. You store it in a file called myUniText.ut8. This is how you would read it in:

put url ("binfile:/path/to/file/myUniText.ut8") into tRawTxt

put textDecode(tRawTxt,"UTF8") into field "myField"

Conversely, to save Unicode text from LiveCode to a UTF-8 file, use textEncode():

put textEncode(fld "myField","UTF8") into tEncText

put tEncText into url "binfile:/path/to/file/myUniFile.ut8"

Here’s Important Thing to Remember number 3:

3. For reliably transporting Unicode text, convert it and store it as UTF-8 text.
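A quick way to convince yourself that nothing is lost along the way is to encode some text and decode it again immediately. This sketch assumes a field named "myField", as in the examples above, and uses "UTF-8" as the encoding name.

```livecode
on mouseUp
   put field "myField" into tOriginal
   put textEncode(tOriginal, "UTF-8") into tEncText
   put textDecode(tEncText, "UTF-8") into tRoundTrip
   -- Compare strictly, so that differences in letter case are not ignored
   set the caseSensitive to true
   if tRoundTrip is tOriginal then
      answer "Round trip OK:" && the number of bytes in tEncText && "bytes of UTF-8"
   else
      answer "The text changed during the round trip"
   end if
end mouseUp
```

The same pair of calls sits behind the file examples above; here the binary data simply stays in a variable instead of being written to disk.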

Using Unicode everywhere else in LiveCode is really easy. As of LiveCode v. 7 all text in LiveCode is encoded as Unicode UTF-16. That includes button labels, scripts, ask and answer dialogs, custom properties, and stack names. In short, you don’t have to think about it; you just type using whatever language you want and LiveCode can understand it.
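As a small illustration, the following button script sets the button's own label to a Cyrillic word and shows the same word in an answer dialog. The word is built from code points here only so that the example does not depend on how the script itself was typed or saved; in LiveCode 7 and later you could type it directly.

```livecode
on mouseUp
   -- "Да" (Russian for "yes"): Д is U+0414 (1044), а is U+0430 (1072)
   put numToCodepoint(1044) & numToCodepoint(1072) into tYes
   set the label of me to tYes
   answer tYes
end mouseUp
```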

LiveCode’s implementation of Unicode is quite robust and fairly complete, so working with international character sets in LiveCode is easier than ever before. If you master the basic concepts I’ve described here, and note the Important Things to Remember I have listed, you will have the tools to produce LiveCode applications for virtually any language.

Gillam, Richard, Unicode Demystified. Addison-Wesley, 2003.

User Guide: Processing Text and Data. General information on text processing in LiveCode, including text encodings and Unicode.

Unicode Consortium Web Site, http://www.unicode.org.

A stack with all of the examples in this article, along with several others, can be accessed at http://livecode.byu.edu/unicode/CharEncodingInLC.livecode.